These medical miracles are changing what science thought possible

There are many of us who are so attached to our smartphones that they might as well be part of us.

As Elon Musk once noted, “How much smarter are you with a phone or computer or without? You’re vastly smarter, actually. You can answer any question pretty much instantly. You can remember flawlessly. Your phone can remember videos, pictures perfectly. Your phone is already an extension of you. You’re already a cyborg.”

But brain-computer interfaces, which interpret electrical signals from the human brain and turn them into real, physical actions, are a different beast altogether. As Musk’s Neuralink and others leading the charge in the field have found, translating the workings of the human mind is a formidable challenge. Given the medical breakthroughs brain-computer interfaces are achieving, though, it’s a challenge well worth taking head-on.

Restoring Movement

The market for brain-computer interfaces is growing at a staggering 13.1% year-over-year, according to Grand View Research, and is projected to be worth nearly $4 billion by 2028. A big reason for that growth is the technology’s primary use case: aiding patients with neurological disorders, which affect around 1 billion people worldwide to some degree.

BrainGate has allowed patients suffering from ALS, stroke, and spinal cord injuries to control computer cursors with their thoughts. By simply imagining their hands moving the cursor while BrainGate’s micro-electrode interface was connected to their brains, patients with tetraplegia (full or partial loss of use of all four limbs) were able to reach targets on a computer screen with calibration times of less than a minute.

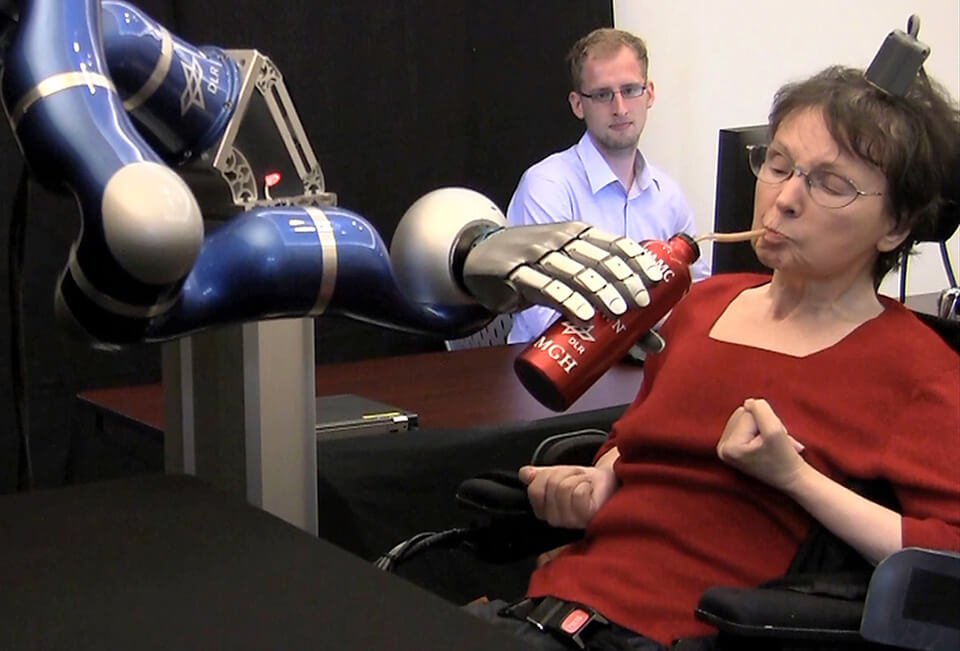

“In the past few years, our team has demonstrated that people with tetraplegia can use the investigational BrainGate BCI to gain multidimensional control of a robotic arm, to point-and-click on a computer screen to type 39-plus correct characters per minute, and even to move their own arm and hand again, all simply by thinking about that movement,” Dr. Leigh Hochberg, director of the BrainGate consortium, told Science Daily.

At the University of Southern California’s Center for Neural Engineering, researchers are working to create a memory prosthesis that could take the place of the hippocampus in damaged brains, such as those of people suffering from Alzheimer’s disease. The prosthetic uses people’s own memory patterns to help the brain encode and recall memories. Studies have shown a 37% increase over baseline in episodic memory, which holds new information for a short time. Episodic memory is often lost in patients with Alzheimer’s, stroke, and head injury.

Some devices are powerful enough to let users operate not just computer cursors but prosthetic limbs. Invictus BCI uses machine learning and sensor technology to “restore near natural functionality,” the company says.

“Simply put,” Eeshan Tripathii, who co-founded Invictus with his sister Vini, who had a hand amputated, told MIT News, “we’re building a device that decodes your muscle and brain signals and translates them into commands that a prosthetic hand can use to make gestures and grasp objects. We aren’t building the prosthetic hand; we’re building the interface that controls the hand, and we’re doing it completely non-invasively.”

Complex Language

It’s the non-invasive part that might be the siblings’ biggest feat. The first basic component of a brain-computer interface is a receiver that measures brain activity, usually in the form of either a chip that needs to be implanted in the brain or a headset. Implanting chips requires complex and potentially dangerous surgery that only specialized neurosurgeons can perform. It’s expensive, and it’s risky.

The second component is a computer running specialized software that processes and interprets signals from the brain.

Last is a receptor, something the user can control that receives the instructions and turns them into action. Those are typically virtual keyboards or robotic limbs.
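For readers who think in code, the three components described above can be sketched as a tiny pipeline. This is a toy illustration only, not how BrainGate, Invictus, or any real system works: the two-channel "recording," the `mean_power` feature, the `decode` rule, and the `Cursor` class are all invented here for the sake of the example.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical two-channel "recording": each sample is (channel_a, channel_b).
Sample = Tuple[float, float]


def mean_power(samples: List[Sample]) -> Tuple[float, float]:
    """Component 1 stand-in: summarize raw samples as average power per channel."""
    n = len(samples)
    return (
        sum(a * a for a, _ in samples) / n,
        sum(b * b for _, b in samples) / n,
    )


def decode(features: Tuple[float, float]) -> str:
    """Component 2 stand-in: map a signal pattern to a command.

    Invented rule: channel A must carry strictly more power to mean "left".
    """
    power_a, power_b = features
    return "left" if power_a > power_b else "right"


@dataclass
class Cursor:
    """Component 3 stand-in: the thing the user actually controls."""
    x: int = 0

    def apply(self, command: str) -> None:
        self.x += -1 if command == "left" else 1


cursor = Cursor()
window = [(0.9, 0.1), (1.1, 0.2), (0.8, 0.1)]  # made-up samples, stronger on channel A
cursor.apply(decode(mean_power(window)))
print(cursor.x)  # prints -1: the cursor moved one step left
```

Real decoders replace the one-line rule in `decode` with machine-learning models trained on each user's own signals, which is part of why calibration and training matter so much.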

“The success of BCIs hinges on our ability to decode the complex language of the brain and translate it into actionable commands. By leveraging advances in machine learning, neural decoding algorithms, and neuroimaging techniques, we can enhance the accuracy, speed, and reliability of BCIs, bringing us closer to seamless brain-machine communication,” the late neuroprosthetics pioneer Dr. Krishna Shenoy wrote.

It’s not really reading a person’s mind, Dr. Miguel Nicolelis told CNN; rather, it’s using algorithms to look for patterns in the electrical signals the brain sends out. Using a brain-controlled exoskeleton that Nicolelis designed, a paraplegic man performed the ceremonial opening kick at the 2014 World Cup in Nicolelis’ native Brazil. In the decade since, technology and results have improved by leaps and bounds.

Remaining Hurdles

Even with those advances, there’s little about brain-computer interfaces that comes easily. The algorithms and software that can make sense of our brain signals are hard to build, requiring a great deal of technical skill.

Users need to undergo rigorous training to use the devices. BrainPort Technologies’ training, for example, is 10 hours over three days. Its Vision Pro uses a video camera attached to a headband to translate digital information into electrical patterns on the tongue. Blind users “see” with their tongues by interpreting bubble-like patterns to determine the size, shape, location, and motion of objects around them. Putting all their brain power into getting the interfaces to do what they want is taxing, requiring users to rest often.

The ethical responsibilities involved with handling sensitive personal data become infinitely more complex when you’re dealing with the innermost workings of people’s minds via devices connected to their brains.

But when it lets a parent with ALS send the message, “I love my cool son,” it all seems worth it.
